Helidon MCP + Ollama Integration

An integration of Helidon microservices with the Model Context Protocol (MCP) and Ollama for AI-powered development workflows. The project lets Java microservices query locally hosted AI models, and lets those models call the services' own APIs as MCP tools.

Overview

Helidon MCP + Ollama Integration is a hands-on engineering project built to explore how Java microservices can integrate directly with large language models (LLMs).

The system combines Helidon’s Model Context Protocol (MCP) support, LangChain4j, and Ollama to create an intelligent backend that can reason over live data sources.

Objective

To design a self-contained AI microservice that:

- Runs fully on local infrastructure (no external API dependency)

- Connects Java APIs to LLM reasoning through MCP

- Demonstrates dynamic tool invocation with Helidon MCP

- Uses Ollama for fast, private inference on models like Llama 3 and Qwen 3

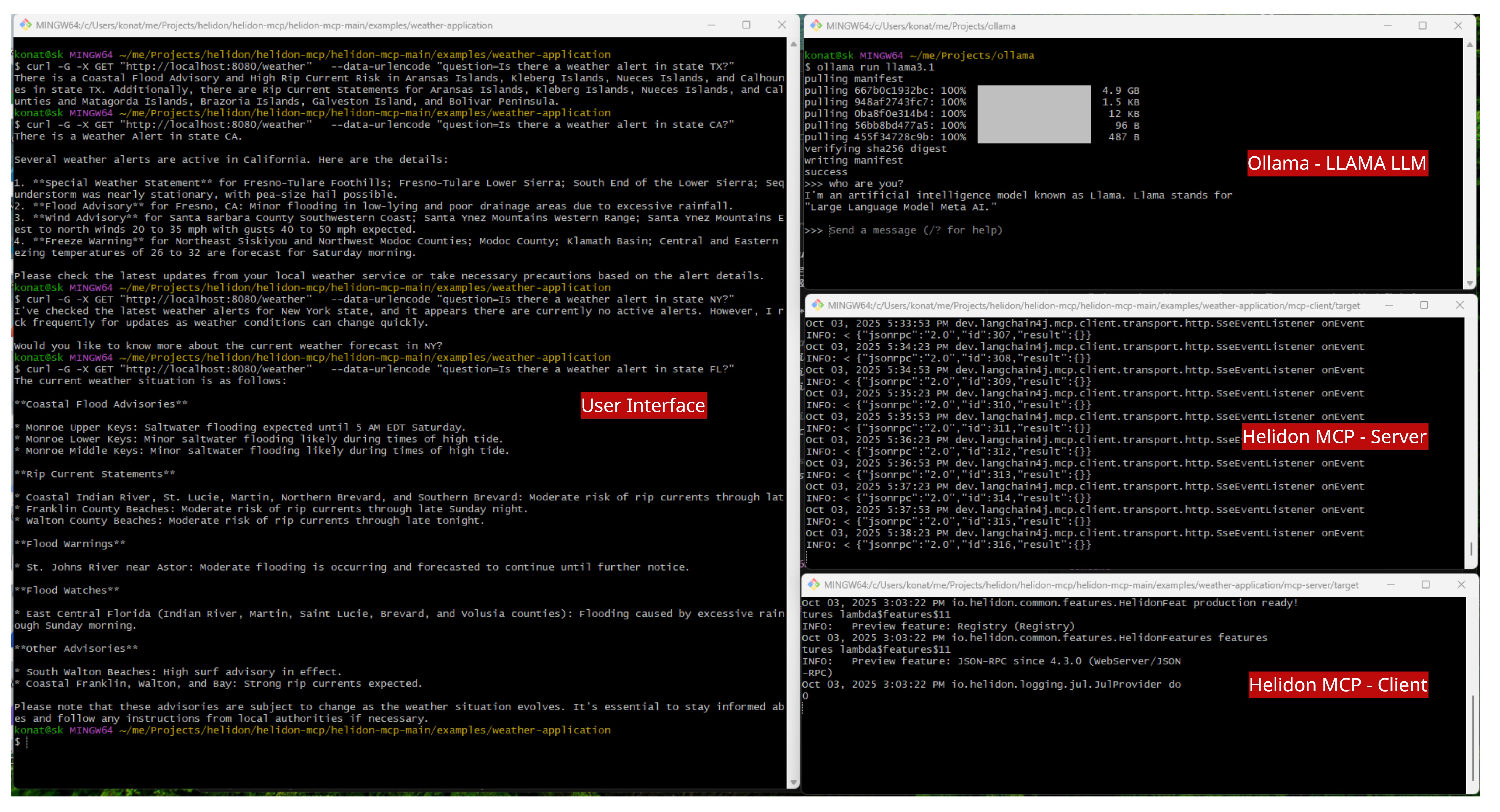

System Architecture

[User Interface]

↓

[Helidon API Layer] → LangChain4j (AI Service)

↓

[MCP Client] → MCP Server → External API (Weather)

↓

[Ollama LLM] (Llama 3 / Qwen 3)
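To make the diagram concrete, here is a small, dependency-free sketch of one request's path through the layers. All names in it (`ChatModel`, `ToolClient`, `WeatherAssistant`, and the `TOOL:name:argument` reply convention) are hypothetical stand-ins, not the LangChain4j or Helidon MCP APIs; in the project, this tool-calling loop is LangChain4j's job.

```java
/** Stand-in for the Ollama-backed LLM (hypothetical interface, not the LangChain4j API). */
interface ChatModel {
    String complete(String prompt);
}

/** Stand-in for the MCP client, which forwards a tool call to the MCP server (hypothetical). */
interface ToolClient {
    String callTool(String name, String argument);
}

/** Hypothetical orchestration layer playing the role LangChain4j plays in the project. */
final class WeatherAssistant {
    private final ChatModel model;
    private final ToolClient tools;

    WeatherAssistant(ChatModel model, ToolClient tools) {
        this.model = model;
        this.tools = tools;
    }

    /** Handles one question arriving at the /weather endpoint. */
    String answer(String question) {
        // 1. Ask the model; it may reply with a tool request such as "TOOL:get_alerts:TX".
        String first = model.complete(question);
        if (!first.startsWith("TOOL:")) {
            return first; // The model answered without needing a tool.
        }
        // 2. Parse the (hypothetical) tool request and run it through the MCP client.
        String[] parts = first.split(":", 3);
        if (parts.length < 3) {
            throw new IllegalStateException("Malformed tool request: " + first);
        }
        String toolResult = tools.callTool(parts[1], parts[2]);
        // 3. Hand the tool output back to the model so it can write the final answer.
        return model.complete("Question: " + question + "\nTool result: " + toolResult);
    }
}
```

In the real system the model returns a structured tool-call request rather than a string prefix; the string convention only keeps the sketch free of dependencies. Because both interfaces have a single method, lambdas are enough to try it out.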

Components

- Helidon MCP Server: Exposes domain tools (e.g., fetching live weather data).

- Helidon MCP Client: Connects to the server and registers those tools with LangChain4j.

- LangChain4j: Manages prompts, memory, and LLM reasoning.

- Ollama: Runs a local LLM (Qwen or Llama) without cloud dependency.

- Helidon REST API: Provides the `/weather?question=` endpoint for querying in natural language.
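MCP messages are JSON-RPC 2.0. To invoke a server tool, the client sends a `tools/call` request carrying the tool name and its arguments. The sketch below builds that message with plain Java string handling so the wire format is visible. The tool name `get_alerts` and its `state` argument are hypothetical stand-ins for whatever the weather server actually exposes, and in the project the Helidon MCP client produces these messages for you.

```java
import java.util.Map;

/** Builds MCP "tools/call" JSON-RPC 2.0 requests without a JSON library (sketch only). */
final class McpRequests {
    private McpRequests() {}

    /** Escapes a string and wraps it in quotes as a JSON string literal. */
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // Other control chars.
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }

    /** Returns the JSON-RPC message an MCP client sends to invoke a server tool. */
    static String toolsCall(int id, String toolName, Map<String, String> arguments) {
        StringBuilder args = new StringBuilder("{");
        for (Map.Entry<String, String> e : arguments.entrySet()) {
            if (args.length() > 1) {
                args.append(',');
            }
            args.append(jsonString(e.getKey())).append(':').append(jsonString(e.getValue()));
        }
        args.append('}');
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\",\"params\":{\"name\":" + jsonString(toolName)
                + ",\"arguments\":" + args + "}}";
    }
}
```

For example, `McpRequests.toolsCall(1, "get_alerts", Map.of("state", "TX"))` yields `{"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"get_alerts","arguments":{"state":"TX"}}}`. Pass a `LinkedHashMap` if argument order matters for readability.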

Key Features

- Java 21 / Helidon 4 microservice architecture

- Fully local inference via Ollama

- Dynamic MCP Client–Server communication

- Plug-and-play tool architecture (add new APIs easily)

- Private AI orchestration: prompts, tool calls, and model inference stay on local infrastructure
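The plug-and-play idea can be sketched as a registry that maps each tool name to a description (what the LLM reads when choosing a tool) and a handler (the code that calls the backing API). `ToolRegistry` and the tool names are hypothetical; this is not the Helidon MCP API, which has its own way of declaring tools.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

/** Hypothetical registry illustrating plug-and-play tools; not the Helidon MCP API. */
final class ToolRegistry {
    /** A tool: a description the LLM can read plus a handler that does the work. */
    record Tool(String description, Function<Map<String, String>, String> handler) {}

    // TreeMap keeps tool listings in a stable, alphabetical order.
    private final Map<String, Tool> tools = new TreeMap<>();

    /** Adds a tool; supporting a new API means registering one more entry. */
    ToolRegistry register(String name, String description,
                          Function<Map<String, String>, String> handler) {
        if (tools.putIfAbsent(name, new Tool(description, handler)) != null) {
            throw new IllegalArgumentException("Duplicate tool: " + name);
        }
        return this;
    }

    /** Tool names and descriptions, roughly what an MCP server reports for tools/list. */
    Map<String, String> list() {
        Map<String, String> out = new TreeMap<>();
        tools.forEach((name, tool) -> out.put(name, tool.description()));
        return out;
    }

    /** Dispatches a call by name, roughly what an MCP server does for tools/call. */
    String call(String name, Map<String, String> arguments) {
        Tool tool = tools.get(name);
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + name);
        }
        return tool.handler().apply(arguments);
    }
}
```

Adding another data source then takes one more `register(...)` call; the listing and dispatch code stays unchanged.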

🤖 AI Model Integration

- Ollama Integration: Direct connection to local AI models for privacy-focused AI operations

- Model Context Protocol: Standardized communication between services and AI models

- Multiple Model Support: Seamless switching between different AI models and providers
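Ollama serves local models over a REST API, by default on `http://localhost:11434`, and `POST /api/generate` takes the model name in the request body, so switching models comes down to changing one string. The stdlib sketch below shows the raw request. The class name `OllamaClient` is hypothetical, and the project itself reaches Ollama through LangChain4j rather than hand-built HTTP calls.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Hypothetical minimal client for Ollama's local REST API; the model name is the only switch. */
final class OllamaClient {
    private final URI generateUri;
    private final String model;
    private final HttpClient http = HttpClient.newHttpClient();

    OllamaClient(String baseUrl, String model) {
        this.generateUri = URI.create(baseUrl + "/api/generate");
        this.model = model;
    }

    URI generateUri() {
        return generateUri;
    }

    /** JSON body for a single, non-streaming generation request. */
    String requestBody(String prompt) {
        return "{\"model\":" + quote(model) + ",\"prompt\":" + quote(prompt) + ",\"stream\":false}";
    }

    /** Sends the prompt; Ollama's raw JSON reply carries the generated text in "response". */
    String generateRaw(String prompt) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(generateUri)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody(prompt)))
                .build();
        return http.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    /** Minimal JSON string escaping: quotes, backslashes, and control characters. */
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }
}
```

`new OllamaClient("http://localhost:11434", "llama3.1")` and `new OllamaClient("http://localhost:11434", "qwen3")` differ only in the model string; a model has to be pulled first (for example `ollama pull qwen3`).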

🚀 Helidon Microservices

- Lightweight Framework: Leveraging Helidon’s efficient microservices architecture

- Virtual Threads: Helidon 4 handles requests on Java 21 virtual threads, so blocking-style code scales without a reactive programming model

- Cloud-Native: Built for modern cloud environments with container support
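Helidon 4's web server runs each request on a Java 21 virtual thread, so handlers can call blocking services (an MCP tool, a weather API, Ollama) in plain sequential code. The sketch below uses only the JDK, not Helidon's API, to show the same style applied to fanning out several blocking calls at once; `VirtualFanOut` is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

/** Hypothetical helper: runs blocking calls concurrently, one virtual thread per call. */
final class VirtualFanOut {
    private VirtualFanOut() {}

    /** Applies a possibly blocking call to every input concurrently; results keep input order. */
    static <T, R> List<R> mapConcurrently(List<T> inputs, Function<T, R> blockingCall) {
        // Java 21: each submitted task gets its own virtual thread; close() waits for all tasks.
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<R>> futures = new ArrayList<>();
            for (T input : inputs) {
                futures.add(executor.submit(() -> blockingCall.apply(input)));
            }
            List<R> results = new ArrayList<>();
            for (Future<R> future : futures) {
                results.add(future.get()); // Wait for each result in submission order.
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for results", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A task failed", e.getCause());
        }
    }
}
```

Each call can block freely (for example on an HTTP request) because parking a virtual thread is cheap, which is the design trade Helidon 4 makes instead of a reactive API.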

🔧 Developer Experience

- Easy Setup: Simple configuration and deployment process

- Comprehensive Documentation: Detailed guides and examples

- Extensible Architecture: Plugin-based system for custom integrations

🔧 Tech Stack

| Layer | Technology |
|--------|-------------|
| Frontend | Web Client / REST Consumer |
| Backend | Java 21 + Helidon 4 |
| AI Orchestration | LangChain4j |
| AI Engine | Ollama (Llama 3 / Qwen 3) |
| Integration Protocol | Model Context Protocol (MCP) |

Getting Started

Prerequisites

- Java 21 or higher (required by Helidon 4)

- Maven (to build the project)

- Ollama installed locally

- Docker (optional, for containerized deployment)
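A quick preflight check for the prerequisites can save a confusing startup failure. This hypothetical `Preflight` class checks the running JVM's feature release (Helidon 4 needs Java 21) and whether Ollama answers on its default port via `GET /api/tags`, the Ollama endpoint that lists locally available models.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Hypothetical preflight check for the prerequisites listed above. */
final class Preflight {
    private Preflight() {}

    /** True if the running JVM's feature release is at least the required one. */
    static boolean meetsJavaRequirement(int requiredFeature) {
        return Runtime.version().feature() >= requiredFeature;
    }

    /** True if an Ollama server answers HTTP 200 on its model-listing endpoint. */
    static boolean ollamaReachable(String baseUrl) {
        HttpClient client = HttpClient.newBuilder().connectTimeout(Duration.ofSeconds(2)).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/api/tags"))
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode() == 200;
        } catch (IOException e) {
            return false; // Connection refused, timeout, and similar failures.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("Java 21+: " + meetsJavaRequirement(21));
        System.out.println("Ollama reachable: " + ollamaReachable("http://localhost:11434"));
    }
}
```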

Quick Start

The demo has four moving parts: the UI (here, a terminal sending requests), the Helidon MCP Server, the Helidon MCP Client, and Ollama running Llama. You will need four terminal windows. That is a lot, but it demonstrates the overall flow.

- Run Ollama: `ollama run llama3.1`

- Build the whole weather application from the project root (the weather-application directory): `mvn clean package`

- Run the MCP Server application: `java -jar mcp-server/helidon-mcp-weather-server.jar`

- In another terminal, run the MCP Client application: `java -jar mcp-client/helidon-mcp-weather-client.jar`

- In another terminal, send an example query to test the response: `curl -G "http://localhost:8080/weather" --data-urlencode "question=Is there a weather alert in state TX?"`

  Response: There is a Coastal Flood Advisory and High Rip Current Risk...
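The same request can be sent from Java with the JDK's built-in `java.net.http.HttpClient`. The one subtle part is encoding: `URLEncoder` produces form encoding (spaces become `+`), so the sketch converts spaces to `%20` to match what curl's `--data-urlencode` sends. `WeatherQuery` is a hypothetical helper name; the endpoint and port come from the curl example.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical Java equivalent of the curl example: asks the /weather endpoint a question. */
final class WeatherQuery {
    private WeatherQuery() {}

    /** Builds the request URI, percent-encoding the question like curl's --data-urlencode. */
    static URI weatherUri(String baseUrl, String question) {
        // URLEncoder uses form encoding (space -> '+', literal '+' -> "%2B"), so every
        // remaining '+' is a space and can safely become %20 for a plain query string.
        String encoded = URLEncoder.encode(question, StandardCharsets.UTF_8).replace("+", "%20");
        return URI.create(baseUrl + "/weather?question=" + encoded);
    }

    public static void main(String[] args) throws Exception {
        URI uri = weatherUri("http://localhost:8080", "Is there a weather alert in state TX?");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```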

Summary & Key Takeaways

- MCP Client → entry point exposing /weather API, connects Ollama + MCP.

- MCP Server → provides weather tools (imperative or declarative).

- Ollama → runs the local LLM (Llama, Qwen, Mistral).

- Demo → a query flows User → /weather API on the MCP Client → LLM (which decides to call a tool) → MCP Server → Weather API → LLM (which writes the answer) → back to User.

💡 Takeaway: LangChain4j + Ollama + MCP + Helidon = a powerful recipe for AI microservices that are local and extensible, and a solid base for production work.

Next Steps

The next phase of this project extends the core architecture to build a full AI data exploration platform:

- Add Helidon MCP clients for Elasticsearch and MongoDB

- Create a Helidon MCP domain server for enrichment and rule-based tools

- Integrate an Angular dashboard for real-time visualization

- Expand local model coverage with Mistral, Gemma, and domain-tuned variants

- Introduce memory persistence for contextual chat sessions

- Package the entire system as a Docker Compose deployment
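For the planned memory persistence, a common starting point is a sliding window of recent messages per session, the idea behind LangChain4j's `MessageWindowChatMemory`. The class below is a hypothetical in-memory stand-in, not that API; persisting it would mean backing the `sessions` map with a real store.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical in-memory chat memory: keeps the last N messages of each session. */
final class SessionMemory {
    /** One chat turn; role would be e.g. "user" or "assistant". */
    record Message(String role, String text) {}

    private final int maxMessages;
    private final Map<String, Deque<Message>> sessions = new HashMap<>();

    SessionMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    /** Appends a message, evicting the oldest ones once the window is full. */
    synchronized void add(String sessionId, Message message) {
        Deque<Message> window = sessions.computeIfAbsent(sessionId, id -> new ArrayDeque<>());
        window.addLast(message);
        while (window.size() > maxMessages) {
            window.removeFirst();
        }
    }

    /** Oldest-first copy of a session's window, e.g. to prepend to the next prompt. */
    synchronized List<Message> history(String sessionId) {
        Deque<Message> window = sessions.get(sessionId);
        return window == null ? List.of() : List.copyOf(window);
    }
}
```

The `synchronized` methods keep each window consistent when concurrent requests touch the same session; a bounded window also keeps prompts within the model's context length.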

Each extension moves the system closer to a fully self-contained, enterprise-ready AI backend stack capable of reasoning, retrieval, and visualization, all in Java.

Repositories

- GitHub

This project shows one practical path to AI-integrated Java applications, combining Helidon microservices with locally hosted LLMs and MCP tools.